Guide to Creating a Pentesting Report

The structure, approach, and importance of a well-crafted security assessment report

We’ve seen, and created, thousands of pentesting reports for customers. So we’ve built up a pretty good knowledge of how a successful report should be approached and structured.

Writing effective penetration testing reports is unlikely to be your favourite thing about pentesting. But the report you produce at the end of a project is vital in proving your worth to your clients.

Achieving an effective report is a balancing act. You want to demonstrate how much work went into the project, but whilst the report may be the end of the engagement for your team, it’s just the beginning for another.

The report you create will be used to plan a remediation project and determine the resources required for that project. Your report needs to make it easy to achieve that.

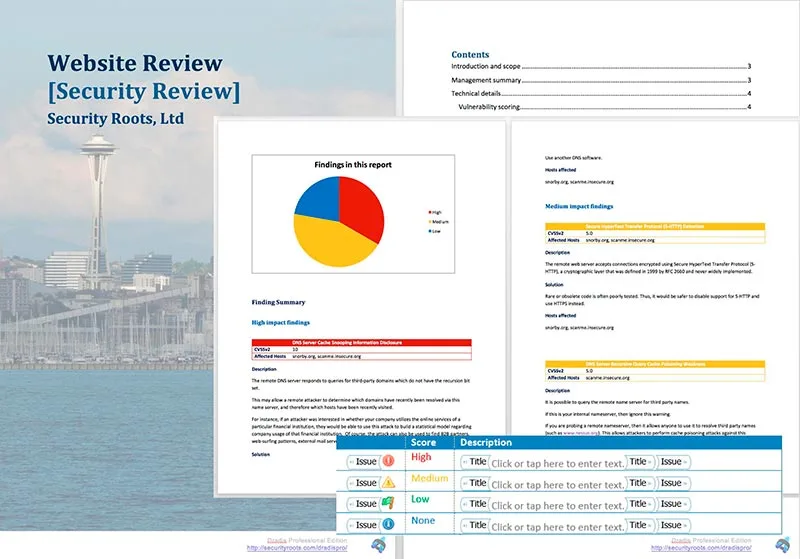

This guide focuses on creating a pentest report manually. If you’re looking for an automated pentest report generator, check out Dradis Pro.

TL;DR

You need to understand the project goals, and realise that the report will have multiple audiences with different levels of technical expertise. Make sure that the deliverable reflects the issues uncovered and documents both the coverage attained and the process followed to attain it.

Give the project team enough technical information about the issues uncovered, the best mitigations to apply, and a means to verify that those mitigations have been implemented, and you will have succeeded in your role as trusted security advisor.

Your report should cover:

- How you found the issue

- The root cause of the vulnerability

- How difficult it was to take advantage of the vulnerability

- Whether the vulnerability can be used for further access

- The potential impact on the organization

- How it can be fixed or mitigated
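
One way to keep these six points from slipping is to capture each finding in a fixed structure while you test. The sketch below is only an illustration: the `Finding` class, its field names, and the `missing_fields` helper are hypothetical and are not taken from any tool mentioned in this guide.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class Finding:
    """One reported issue. Field names are illustrative; each maps to a point in the list above."""
    title: str
    discovery: str = ""           # how you found the issue
    root_cause: str = ""          # the root cause of the vulnerability
    exploit_difficulty: str = ""  # how difficult it was to take advantage of
    further_access: str = ""      # whether it can be used for further access
    impact: str = ""              # the potential impact on the organisation
    remediation: str = ""         # how it can be fixed or mitigated


def missing_fields(finding: Finding) -> list[str]:
    """Return the names of fields that are still empty or whitespace-only."""
    return [f.name for f in fields(finding) if not getattr(finding, f.name).strip()]


# A half-written finding (placeholder content, not real engagement data).
draft = Finding(
    title="Example finding",
    discovery="Manual review of the itinerary update request",
    impact="Traveller A can read traveller B's itinerary",
)
print(missing_fields(draft))
```

Running a check like this before reporting time starts shows which findings still need write-up, rather than discovering the gaps while drafting the deliverable.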

Before starting your security report writing

Creating an effective report begins before you start to write it. There are steps to take, and best practices to follow.

1. Set a clear goal

The security report produced after each engagement should have a clear goal. The goal needs to be aligned with the company's/client’s high-level objectives.

A typical goal could be: “our objective is to secure the information”. This can be a good starting point albeit somewhat naive in all except the most simple cases. A more concrete goal such as “make sure that traveller A can’t modify the itinerary or get access to traveller B’s information” would produce a better outcome.

This goal can then be developed into a goal for the security report.

If we keep it simple and focus on the broader goal of “securing the information”, the goal of the security report needs to be to communicate the results of the test and provide the client with actionable advice they can use to achieve that goal.

2. Establish the audience for the security report

If you’re an in-house security team, this should be pretty simple. If you’re writing a report as a consultancy, it is important to understand the audience of the deliverable you are producing.

Who do you think is going to be reading the report your firm has produced? Is this going to be limited to the developers building the solution, or the IT team managing the servers? Or, as is more likely, will the development manager or the project lead for the environment want to review the results?

You need to understand whether the final report will have an entirely technical audience, or whether there are less technical stakeholders involved.

If your results expose a risk that is big enough for the organisation, the report can go up the ladder, to the CSO, CTO or CEO.

In most cases, your report will have multiple audiences with different technical profiles. Avoid creating a completely technical document full of details, but equally avoid delivering only a high-level summary of the test highlights that is suitable just for C-level execs.

3. Get an evidence-storing process in place

The better you are at note-taking and evidence storage whilst testing, the more efficient you will be at putting your report together.

You should have a central location for storing screenshots that will make it into the Technical Details section of your report.

4. Make sure you have a process for collating results

If multiple pen testers are working on a report, you need to avoid the situation where reporting time is spent collating results from each team member.

Reporting time shouldn’t be collation time. All information must be available to the report writer before the reporting time begins. And it must be available in a format that can be directly used in the report.
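
As a rough sketch of what "a format that can be directly used in the report" might look like, the example below assumes each tester exports findings as a list of dictionaries with `title`, `hosts` and `tester` keys. That format, the `collate` function, and the sample data are all hypothetical.

```python
def collate(*tester_findings):
    """Merge per-tester finding lists, combining entries whose titles match
    after trimming whitespace and ignoring case."""
    merged = {}
    for findings in tester_findings:
        for item in findings:
            key = item["title"].strip().lower()
            entry = merged.setdefault(
                key, {"title": item["title"].strip(), "hosts": [], "testers": []}
            )
            # Keep each host and tester once, in the order first seen.
            for host in item.get("hosts", []):
                if host not in entry["hosts"]:
                    entry["hosts"].append(host)
            if item["tester"] not in entry["testers"]:
                entry["testers"].append(item["tester"])
    return list(merged.values())


# Placeholder data using documentation-range IP addresses.
alice = [{"title": "Weak password policy", "hosts": ["192.0.2.10"], "tester": "alice"}]
bob = [
    {"title": "weak password policy ", "hosts": ["192.0.2.10", "192.0.2.11"], "tester": "bob"},
    {"title": "Directory listing enabled", "hosts": ["192.0.2.12"], "tester": "bob"},
]

combined = collate(alice, bob)
for finding in combined:
    print(finding["title"], finding["hosts"], finding["testers"])
```

Matching on a normalised title is a deliberately simple choice. A team with a shared issues library could match on a library identifier instead, which avoids near-duplicate titles altogether.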

5. Coverage

How good was the coverage attained during the testing phase of the engagement? Was no stone left unturned? Do you have evidence of the issues you uncovered and proof of the areas that were tested but were implemented securely and thus didn’t yield any findings? If not, the task of writing the final report is going to be a challenging one.

Using testing methodologies can improve your consistency and maximise your coverage, raising the quality bar across your projects. Following a standard methodology will ensure that you have gathered all the evidence you need to provide a solid picture of your work in the final deliverable.
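
To make the coverage questions above concrete, here is a small sketch of a methodology checklist that records evidence per task. The task names, file names, and the `coverage_report` function are illustrative assumptions, not a prescribed methodology.

```python
def coverage_report(checklist):
    """Summarise which tasks were tested, which lack evidence, and which were skipped."""
    tested = [t for t in checklist if t["status"] == "tested"]
    percent = round(100 * len(tested) / len(checklist)) if checklist else 0
    return {
        "percent_tested": percent,
        # Tested areas with no stored evidence are hard to defend in the report.
        "tested_without_evidence": [t["task"] for t in tested if not t["evidence"]],
        "not_tested": [t["task"] for t in checklist if t["status"] != "tested"],
    }


# Placeholder checklist: 'finding' is False where the area was tested and found secure.
checklist = [
    {"task": "Authentication", "status": "tested", "evidence": ["auth-01.png"], "finding": True},
    {"task": "Session management", "status": "tested", "evidence": ["session-01.png"], "finding": False},
    {"task": "Input validation", "status": "tested", "evidence": [], "finding": False},
    {"task": "File upload", "status": "out of scope", "evidence": [], "finding": False},
]

report = coverage_report(checklist)
print(report)
```

Note that the secure areas carry evidence too. A task marked as tested with no finding and no evidence is exactly the gap that makes the final report difficult to write.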

The pentest report structure

We’re not going to provide a blow-by-blow breakdown of every possible section and structure. There are some comprehensive resources on the net that go beyond what we could accomplish here (see Reporting – PTES or Writing a Penetration Testing Report).

Instead we’ll focus on the overall structure as well as the approach and philosophy to follow when crafting the report to ensure you are producing a useful deliverable. At a very high level, the report content must be split between:

- Executive summary

- Recommendations/Remediations

- Technical details

- Appendices

Executive summary

Objective: An explanation of the vulnerabilities discovered, and their impact on the environment/users.

Length: Between one and two pages.

Contents:

This is the most important section of the report and it is important not to kid ourselves into thinking otherwise. It's the section that’s most likely to be read by every single person going through the report.

The project was commissioned not because of an inherent desire to produce a technically secure environment but because there was a business need driving it. Call it risk management, strategy, or marketing, it doesn’t matter. The business decided that a security review was necessary and we have to provide the business with a valuable summary.

It is important to keep this in mind and to make sure that the section is worded in a language that doesn’t require a lot of technical expertise to understand. Avoid talking about specific vulnerabilities and focus on the impact these vulnerabilities have on the environment or its users.

Don’t settle for throwing a bunch of low-end issues into the conclusions (e.g. “HTTPS content cached” or “ICMP timestamps enabled”) just to show that you uncovered something. If the environment was relatively secure and only low-impact findings were identified, just say so. Your client will appreciate it.

Frame the discussion around the integrity, confidentiality and availability of data stored, processed and transmitted by the application. Just covering the combination of these 6 concepts should give you more than enough content to create a decent summary (protip: meet the McCumber cube).

Apart from the project’s conclusions and recommendations, it is important that this section contains information about the scope of the test and that it highlights any caveats that arose during the engagement.

Documenting scope and caveats offers you protection should the client decide to challenge your results, approach or coverage attained. If the client asked for a host or a given type of attack to be out of scope, this needs to be clearly stated.

If there were important issues affecting the delivery (e.g. the environment was offline for 12 hours) these also have to be reflected in your executive summary.

Recommendations

Objective: Provide remediation recommendations, showing which vulnerabilities should be tackled first and which can be addressed over the longer term.

Length: One to two pages. You want to cover all of your remediation recommendations in the report, but not necessarily in this section.

Contents:

Your report needs to provide an actionable set of recommendations for the client or remediation team to implement, even if the team creating the report is also responsible for remediation.

This section should highlight recommendations in order of importance. A scoring system, such as the Common Vulnerability Scoring System (CVSS) or DREAD, can help to structure this section.

If you can’t fit all of your findings on one or two pages then focus on the findings of critical and high importance, and include others in the appendix.
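
The ordering and cut-off described above can be sketched as follows. The rating bands are the CVSS v3.x qualitative severity ranges; the `severity` and `split_for_report` functions, the dictionary format, and the sample scores are hypothetical placeholders.

```python
def severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def split_for_report(findings):
    """Order findings by score, then separate Critical/High from the rest."""
    ordered = sorted(findings, key=lambda f: f["cvss"], reverse=True)
    headline = [f for f in ordered if severity(f["cvss"]) in ("Critical", "High")]
    appendix = [f for f in ordered if severity(f["cvss"]) not in ("Critical", "High")]
    return headline, appendix


# Made-up scores for illustration only.
findings = [
    {"title": "Finding C", "cvss": 5.3},
    {"title": "Finding A", "cvss": 9.8},
    {"title": "Finding D", "cvss": 3.1},
    {"title": "Finding B", "cvss": 7.5},
]

headline, appendix = split_for_report(findings)
print([f["title"] for f in headline])   # goes in the Recommendations section
print([f["title"] for f in appendix])   # goes in the appendix
```

A score alone does not capture business impact, so treat the sorted list as a starting point and adjust the order against the engagement goals.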

Technical details

Objective: Deliver details on how identified security flaws can be remediated

Length: As long as is required. Try to be clear and concise in your detailing of issue identification and remediation suggestions. Use screenshots where useful.

Contents:

- Your methodology

- Objectives

- Scope

- Details

- Attack chains

Using a shared issues library could save you a lot of time here. Dradis users find a lot of value in maintaining a customized issues library. If you’re not using an automated pentest reporting tool, then you should consider building your own issues library in Word or Excel to simplify the process of putting together the technical details section.

Issues libraries can streamline your process, and help ensure consistency of language and rating across projects.
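
If you are building your own library, the idea can be as simple as a lookup table of standard wording that gets combined with engagement-specific details. The sketch below is a hypothetical illustration: `ISSUE_LIBRARY`, `from_library`, and the entry shown are assumptions and do not represent how Dradis or any other tool stores its library.

```python
# A tiny in-memory library keyed by a short identifier (illustrative entry only).
ISSUE_LIBRARY = {
    "xss-reflected": {
        "title": "Reflected cross-site scripting",
        "description": "User-supplied input is returned in the response without output encoding.",
        "recommendation": "Encode output for the context in which it is rendered and validate input.",
    },
}


def from_library(issue_id, affected, notes=""):
    """Build a report finding from the standard wording plus engagement-specific details.

    Raises KeyError if the identifier is not in the library, so typos surface early.
    """
    entry = ISSUE_LIBRARY[issue_id]
    return {**entry, "affected": list(affected), "notes": notes}


finding = from_library(
    "xss-reflected",
    affected=["/search?q="],  # placeholder location
    notes="Triggered while logged in as a standard user.",
)
print(finding["title"], finding["affected"])
```

Because the standard wording lives in one place, a correction to a description or recommendation applies to every future report that uses that entry.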

When writing this section you should be considering the re-test. If six months down the line a re-test is requested, would any of your colleagues be able to reproduce your findings using exclusively the information you have provided in the report?

Non-obvious things that usually trip you up are details about the user role you were logged in as when you found the issue, or remembering to include the series of steps you followed from the login form to the POST request that exposed the issue. Certain issues will only be triggered if the right combination of events and steps is performed. Documenting only the last step in the process doesn’t usually provide a solid enough base for a re-test.

Finally, everything needs to be weighed and measured against the engagement goals and the business impact to the client.

Appendices

Objective: Further technical details, scan outputs.

Length: There’s no limit to this section. Keep descriptions succinct, but cover all issues.

Contents:

The Appendices should contain information that, while not key to understanding the results of the assessment, would be useful for someone trying to gain a better insight into the process followed and the results obtained.

An often overlooked property of the Appendices section is that it provides a window to the internal processes followed by the testing team in particular and the security firm in general.

Putting a bit of effort into structuring this section gives the client a more transparent view of those processes. In the majority of cases, this additional or supporting information is limited to scan results or tool output.

You should also include a breakdown of the methodology used by the team, detailing the areas assessed during this particular engagement along with the evidence gathered for each task in the list to either provide assurance about its security or reveal a flaw.

How to differentiate yourself with your report

This is less relevant for in-house security teams, but for consultancies, your report is in many ways your product. It’s your final output, and it’s what clients may use to compare you against other consultancies.

The most important thing is to ensure that your report:

- Offers a comprehensive view of issues

- Structures identified issues in order of impact/importance

- Clearly and concisely details why issues are important

- Clearly and concisely details how to remediate issues

To make sure that your report stands out, consider:

- Tailoring your report to your audience.

- Simplifying complex topics, whilst also including technical detail.

- Improving your team collaboration, whether through a pentest collaboration tool or internal processes.

- Proofreading. Typos can damage credibility.

- Paying attention to design. The quality of the content is always the most important thing, but some of the audiences reading the report will appreciate a sleek design more than others.

Want to save time on creating your penetration testing report?

Reporting can be time-consuming. And whilst it’s a vital part of what you do, it can feel like your time would be better spent testing. You can streamline your report creation through improved processes, or with a collaboration and reporting tool.

Dradis users tell us that they save six hours per report on average. That’s because they can:

- Merge findings from multiple tools

- Automatically replace the Issue's body with a custom IssueLibrary entry

- Use templates to implement consistent methodologies, and communicate with their team within the context of the work.
